If AI policy has felt like a moving target, today’s news is a rare moment of clarity. California’s Civil Rights Department finalised regulations to curb discriminatory impacts from AI and automated decision-making in the workplace, with transparency requirements built in. The rules take effect on October 1. That is not a distant deadline. For planning purposes, it is now.

There is more. California advanced some of the most sweeping AI safety legislation in the country. After a messy draft that would have lumped college students in with frontier labs, lawmakers recalibrated. The new regime targets companies with $500M+ in revenue and the developers of truly large models. Students and small builders can still learn and experiment without enterprise-grade burdens.

For those of us who have been banging the drum on AI safety for a long time, this is a win. Not a victory lap, but proof that evidence, expert input, and well-designed governance can beat hype.

WHAT ACTUALLY CHANGED, IN PLAIN ENGLISH

1) Transparency is not optional. Employers cannot deploy automated decision systems for hiring, promotion, or similar people decisions unless the systems are transparent and explainable.

2) Scope that makes sense. The revised bill avoids crushing students and small teams. It applies to companies at genuine scale and to frontier model developers with the means to train the largest systems.

3) Evidence over vibes. An earlier bill was vetoed to allow time for a substantive expert report. That report landed with what policy often lacks: checklists, frameworks, and procedures that security and compliance leaders can actually use.

4) Whistleblower protections, with caveats. Protections exist, which is good. They are not body armour. The point is to encourage early, honest reporting of harms without turning every internal thread into a legal saga.

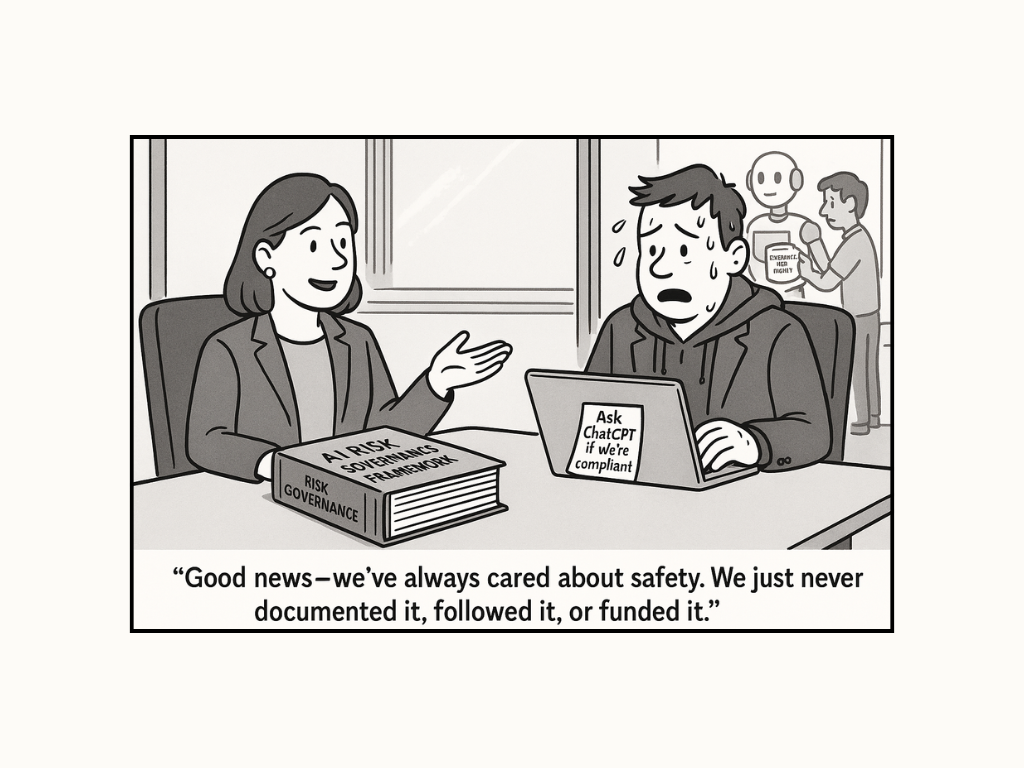

5) Duties on catastrophic risk. Firms must assess and manage catastrophic risks, disclose catastrophic safety incidents, and not misrepresent either. Quietly dissolving your alignment team is not a viable strategy.

6) A public reporting channel. California’s Office of Emergency Services will provide a place for the public and insiders to report critical AI safety incidents. The challenge will be separating signal from noise, since language models can flood any inbox.

WHY THIS MATTERS FOR LEADERS

This is not just compliance. It is operational maturity for AI.

- Governance gets real. You will need documented risk assessments, model cards, evaluation protocols, human-in-the-loop controls, and escalation paths.

- Accountability shifts left. Safety, legal, security, HR, and product must co-own outcomes from design to deployment, not only after a headline.

- Trust becomes a moat. Transparent practices reduce legal exposure and raise credibility with employees, applicants, and customers.

- Vendor and talent implications. A vendor's label does not remove your responsibility. If a tool influences people decisions, your name is still on the door.

A QUICK PRIMER ON AI SAFETY INCIDENTS

Leaders often ask what counts. Here are common patterns:

- Discriminatory outcomes in hiring, promotion, pay, scheduling, or termination, produced or assisted by automated tools.

- Model misbehavior with real-world stakes, such as fabrication in safety-critical contexts or bypasses of safeguards that enable harm.

- Process failures, including removing or underfunding alignment or safety teams, ignoring known risks, or failing to monitor drift and red-team findings.

- Breach-like scenarios that expose sensitive training data or produce outputs that enable material misuse.

If you cannot spot, triage, and report these quickly, regulation will expose that gap for you.

YOUR 30-DAY ACTION PLAN

Week 1: Inventory and ownership

- List every automated decision system that touches people decisions.

- Assign named accountable owners across Legal, HR, Security, and Product.
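
A minimal sketch of what such an inventory could look like, assuming a simple in-house Python record. The field names (`decision_area`, `vendor`, `owners`), the example systems, and the people named are all hypothetical. Nothing here is a format the regulations prescribe. The only idea taken from the steps above is that every system gets a named owner in Legal, HR, Security, and Product.

```python
from dataclasses import dataclass, field

# Functions that should each name an accountable owner (taken from the Week 1 list).
REQUIRED_FUNCTIONS = ("Legal", "HR", "Security", "Product")


@dataclass
class AutomatedSystem:
    """One automated decision system that touches people decisions (illustrative)."""
    name: str
    decision_area: str  # e.g. "hiring", "promotion", "scheduling"
    vendor: str         # "in-house" if built internally
    owners: dict = field(default_factory=dict)  # function -> named person

    def missing_owners(self):
        """Return the functions that have no named accountable owner yet."""
        return [f for f in REQUIRED_FUNCTIONS if not self.owners.get(f)]


# Hypothetical inventory entries.
inventory = [
    AutomatedSystem("resume-screener", "hiring", "ExampleVendor",
                    {"Legal": "A. Rivera", "HR": "B. Chen"}),
    AutomatedSystem("shift-planner", "scheduling", "in-house",
                    {"Legal": "A. Rivera", "HR": "B. Chen",
                     "Security": "C. Okafor", "Product": "D. Patel"}),
]

for system in inventory:
    gaps = system.missing_owners()
    status = "complete" if not gaps else "missing: " + ", ".join(gaps)
    print(f"{system.name} ({system.decision_area}): {status}")
```

A spreadsheet does the same job. What matters is that an ownership gap is visible at a glance rather than discovered during an incident.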

Week 2: Minimum viable governance

- Stand up a lightweight Safety Review: intake form, risk rubric, and sign-off.

- Document data sources, model versions, evaluation metrics, and known limitations.
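
To make "risk rubric" concrete, here is one possible sketch. The four questions, the 0 to 2 scoring, the tier thresholds, and the sign-off rules in the comments are all assumptions for illustration. Your own Safety Review would set these with Legal and HR.

```python
# Hypothetical rubric questions; each is scored 0 (no), 1 (partly), or 2 (yes).
RUBRIC = {
    "people_decision": "Does the system influence hiring, promotion, pay, or termination?",
    "no_human_review": "Can its output take effect without a human reviewing it?",
    "undocumented": "Are data sources, model version, or known limitations undocumented?",
    "unevaluated": "Are evaluation metrics missing or out of date?",
}


def risk_tier(scores):
    """Map rubric scores to a tier. Thresholds are illustrative assumptions."""
    missing = sorted(set(RUBRIC) - set(scores))
    if missing:
        raise ValueError(f"unanswered rubric questions: {missing}")
    if any(scores[q] not in (0, 1, 2) for q in RUBRIC):
        raise ValueError("each score must be 0, 1, or 2")
    total = sum(scores[q] for q in RUBRIC)
    if total >= 5:
        return "high"    # assumed rule: sign-off from every accountable owner
    if total >= 2:
        return "medium"  # assumed rule: sign-off from the primary owner
    return "low"         # assumed rule: record the review and proceed


# Example intake form answers for a hypothetical system.
intake = {"people_decision": 2, "no_human_review": 1,
          "undocumented": 0, "unevaluated": 1}
print(risk_tier(intake))
```

An unanswered question raises an error instead of counting as zero, so an incomplete intake form cannot pass as low risk.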

Week 3: Bias and robustness checks

- Run fairness audits at three points: a baseline before deployment, ongoing checks while the system is live, and a follow-up review after deployment.

- Add human-in-the-loop checkpoints for high-impact decisions.
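
One common starting point for a baseline fairness check is to compare selection rates across groups. The sketch below uses the four-fifths rule of thumb from the US Uniform Guidelines on Employee Selection Procedures as its threshold. That choice is an assumption made here for illustration. It is not something the California rules are stated to require, and a ratio below 0.8 is a prompt for closer review, not a legal finding. The group names and counts are made up.

```python
# Assumed threshold: the "four-fifths" rule of thumb, used here as a screening heuristic.
FOUR_FIFTHS = 0.8


def selection_rates(outcomes):
    """outcomes maps group -> (selected, total). Groups with no applicants are skipped."""
    return {g: selected / total
            for g, (selected, total) in outcomes.items() if total > 0}


def adverse_impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(outcomes)
    if not rates or max(rates.values()) == 0:
        raise ValueError("no selections recorded, so ratios are undefined")
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


def flagged_groups(outcomes, threshold=FOUR_FIFTHS):
    """Groups whose ratio falls below the threshold and should go to human review."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(g for g, r in ratios.items() if r < threshold)


# Made-up screening results: (candidates advanced, candidates screened).
results = {"group_a": (48, 100), "group_b": (30, 100)}
print(adverse_impact_ratios(results))
print(flagged_groups(results))
```

Running the same check on the same schedule before and after launch makes drift visible. The flagged list is a natural trigger for the human-in-the-loop checkpoint.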

Week 4: Incident readiness

- Define what a material incident is, plus escalation paths, whistleblower intake, and public reporting procedures.

- Tabletop a simulated incident. If it feels chaotic, the plan is not ready.
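
For the tabletop, it can help to write the escalation path down as data rather than leave it as tribal knowledge. The sketch below is an assumption-heavy illustration. The four categories loosely follow the incident patterns listed earlier, the routing table is invented, and the 48-hour window echoes the reflection prompt later in this piece rather than any legal deadline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical internal response target, not a regulatory deadline.
RESPONSE_WINDOW = timedelta(hours=48)

# Hypothetical routing table: incident category -> teams to notify first.
ESCALATION = {
    "discriminatory_outcome": ["HR", "Legal"],
    "model_misbehavior": ["Product", "Security"],
    "process_failure": ["Legal", "Executive sponsor"],
    "data_exposure": ["Security", "Legal"],
}


@dataclass
class Incident:
    category: str
    summary: str
    detected_at: datetime

    def escalate_to(self):
        """Teams to notify, or an error if the category has no defined path."""
        if self.category not in ESCALATION:
            raise ValueError(f"no escalation path defined for {self.category!r}")
        return ESCALATION[self.category]

    def respond_by(self):
        """Time by which detection, explanation, and response should be complete."""
        return self.detected_at + RESPONSE_WINDOW


# A simulated incident for the tabletop exercise.
drill = Incident("data_exposure", "Simulated leak of training records",
                 datetime(2025, 1, 1, 9, 0))
print(drill.escalate_to(), drill.respond_by().isoformat())
```

If the drill surfaces a category with no entry in the table, that is the gap to close before a real incident does it for you.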

THE BIGGER PICTURE

California’s move signals a shift from innovation at all costs to innovation with guardrails. It does not punish learning. It targets scale and risk. It asks leaders to prove they can build powerful systems without leaving people behind. That is not red tape. That is leadership.

If you are an executive wondering where to start, consider this your nudge. The hard part is not the paperwork. It is the culture that makes safety, ethics, and inclusion part of how you ship.

FOR C-SUITES READY TO LEAD

Lead your organisation into the AI era. Our mission is to guide senior leaders through AI disruption with a human-centric lens. The secret sauce is world-class leadership psychology paired with deep technical mastery. Relationship-first beats transaction-first when change gets real.

- Executive Insights: a 1-hour C-suite briefing on risk, ROI, and readiness.

- Strategic Momentum Workshop: a half-day on prompt engineering, 80/20 oversight, and governance guardrails.

- Transformation Masterclass: a full-day roadmap for ethics, measurable impact, and compliance-by-design.

- Ongoing Coaching and Micro-Labs: continuous capability building and decision support.

Reflection prompts for your next exec meeting:

- Where does AI currently influence people decisions in our organisation?

- If a safety incident landed tomorrow, could we detect it, explain it, and respond within 48 hours?

- What would it take to convert compliance into a competitive advantage this quarter?

Build boldly, and build safely.
