Generative AI has rapidly evolved from a fascinating curiosity into a groundbreaking force shaping how we create, communicate, and collaborate. This AI era—defined by the ability of machines to produce imaginative, coherent, and compelling content ranging from whimsical stories to practical programming code—is both thrilling and deeply challenging.

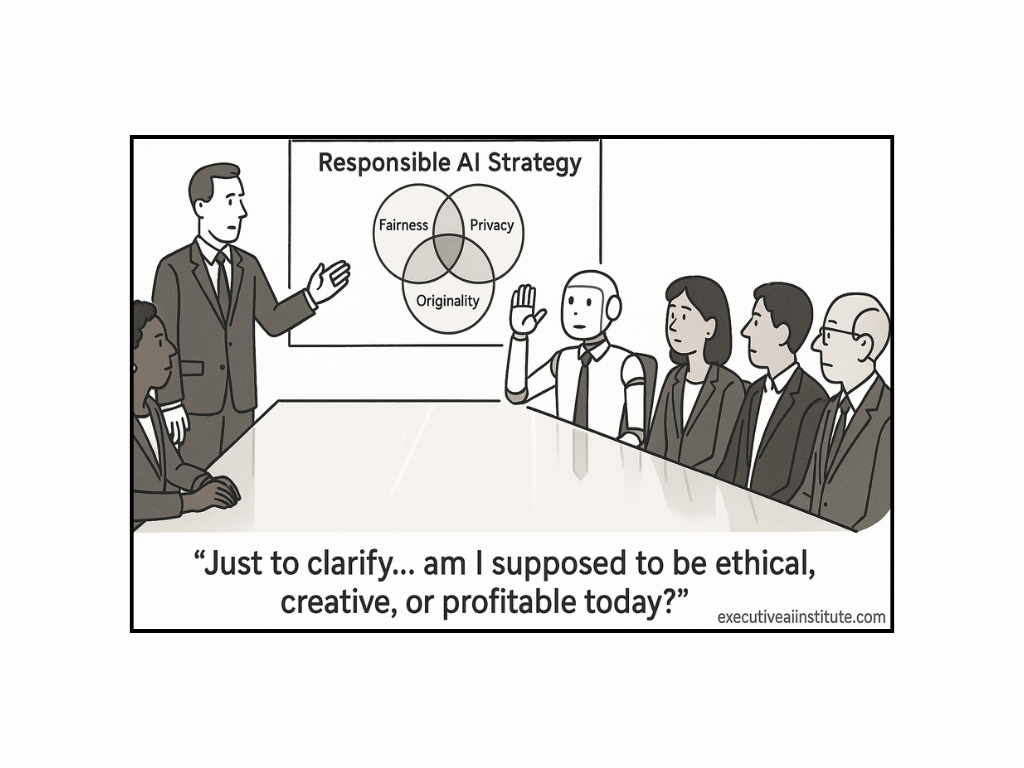

Generative AI might write poems that dazzle, stories that engage, and even code snippets that simplify our lives, but its rise poses fresh questions around fairness, toxicity, intellectual property, and more.

But fear not—although these challenges are significant, thoughtful solutions are emerging.

Let’s unpack the essentials of responsible AI in this generative era, discovering both pitfalls and pathways forward.

UNDERSTANDING GENERATIVE AI: A BRIEF PRIMER

Generative AI models, such as large language models (LLMs), work by repeatedly predicting the most likely next piece of text (a word or word fragment, known as a token) based on patterns learned from vast troves of training data. Think of it like having a billion-book library and instantly drawing from that knowledge to craft the perfect sentence. Unlike traditional AI that solves narrow problems (like deciding loan eligibility), generative AI thrives on open-ended creation, producing something new each time it runs.
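For readers who want to see the "predict the next step" idea in miniature, here is a toy sketch in Python. It is only an illustration under heavy simplification: it counts which word follows which in a tiny made-up corpus, whereas real LLMs use neural networks trained on tokens at enormous scale. All function names and the sample text are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, max_words=8):
    """Build a sentence by repeatedly appending the most likely next word."""
    output = [start.lower()]
    while len(output) < max_words:
        next_word = predict_next(model, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)


# Tiny illustrative "training data" (made up for this example).
corpus = "the cat sat on the mat . the cat sat on the sofa . the dog ate the fish ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))            # cat
print(generate(model, "the", max_words=4))   # the cat sat on
```

Even this toy version shows why output quality depends so heavily on training data: the model can only echo patterns it has already seen.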

Sounds fantastic, right? Indeed. But this boundless creativity brings unique concerns.

THE NEW CHALLENGES: MORE THAN A TECHNICAL PUZZLE

Here’s the catch: generative AI's strength—its creativity—is also its Achilles’ heel when it comes to responsible use.

Here’s why:

1. Fairness Becomes Murky

When AI is making straightforward decisions (like loan applications), fairness might be defined clearly (e.g., equal outcomes across genders). But what happens when AI crafts stories or artwork? Should it evenly distribute pronouns, professions, or positive descriptors across all demographics? Who defines what's fair in art or storytelling, and where do we draw the line?

2. Privacy Gets Complicated

Generative AI might unintentionally leak subtle private details by closely mirroring its training data—like slightly modifying proprietary code or echoing personal anecdotes. Protecting privacy in generative contexts requires sophisticated solutions that move beyond simple data filtering.

3. Toxicity and Censorship: The Blurry Line

Determining what's offensive or harmful is inherently subjective. What may seem like harmless satire to one group could feel deeply hurtful to another. Guardrails must balance blocking harmful content against the risk of suppressing important or genuine expression.

4. Intellectual Property and Creative Mimicry

Generative AI models can create art “in the style” of famous artists, raising thorny questions about originality and ownership. Is mimicking Warhol inspiration or infringement?

5. Hallucinations and Accuracy

Generative AI can confidently make up facts—known as “hallucinations”—which sound plausible but are false. Imagine relying on an AI-generated financial news article that’s entirely fictional but convincing.

6. Ethical Implications in Education and Work

With students and professionals using AI to write essays or complete tasks, verifying authenticity becomes tricky. Will AI lead to widespread cheating, or is it simply another tool that educators and employers must adapt to?

NAVIGATING SOLUTIONS: STEPS TOWARDS RESPONSIBLE AI

The good news? Practical solutions are actively being developed and refined. Here’s how we can steer generative AI toward safer, fairer, and more ethical outcomes:

1. Data Curation and Guardrails

By carefully curating training data, developers can reduce obvious bias and offensive language in what a model learns. Pair this with guardrail models (tools specifically trained to identify and filter inappropriate content) to strengthen protections against toxicity.

2. Enhanced Transparency and User Education

Educating users about AI’s capabilities—and limitations—is vital. Clear disclaimers about AI-generated content and proactive user training can manage expectations and prevent harmful misuse.

3. Improving Accuracy and Attribution

Addressing hallucinations involves linking AI-generated content to verified databases and external sources so that claims can be checked and cited, which improves factual accuracy without guaranteeing it. Separately, techniques like watermarking or digital fingerprinting can help identify AI-generated content, aiding transparency and accountability.

4. Legal, Policy, and Ethical Frameworks

Emerging techniques offer promising pathways here. Differential privacy limits how much any single training example can influence a model, and "model disgorgement" reduces or removes the influence of protected content. Both can help address privacy and intellectual property concerns. Legal clarity and policy frameworks will further solidify responsible AI standards.

5. Shaping the Nature of Work

Rather than fearing replacement by AI, professions should proactively adapt to integrate AI into workflows, enabling higher productivity and perhaps even creating entirely new roles (hello, prompt engineers!).
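To make the guardrail idea from the first step more concrete, here is a minimal Python sketch of a check that sits between a model and the user. It is a deliberately simplified stand-in: it uses a fixed list of placeholder terms, whereas the guardrail models described above are trained classifiers. Every name here (`BLOCKED_TERMS`, `check_output`, `guarded_generate`, `fake_model`) is hypothetical and for illustration only.

```python
from dataclasses import dataclass

# Hypothetical placeholder terms. A production guardrail would typically be
# a trained classifier rather than a fixed word list.
BLOCKED_TERMS = frozenset({"slur_example", "threat_example"})


@dataclass
class GuardrailResult:
    allowed: bool   # whether the draft may be shown to the user
    text: str       # the text to show (the draft, or a withheld notice)
    reason: str     # short explanation, useful for audit logs


def check_output(text, blocked_terms=BLOCKED_TERMS):
    """Flag a draft if any word, stripped of punctuation, is on the blocklist."""
    words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
    hits = sorted(words & blocked_terms)
    if hits:
        return GuardrailResult(
            allowed=False,
            text="[response withheld by content filter]",
            reason="matched blocked terms: " + ", ".join(hits),
        )
    return GuardrailResult(allowed=True, text=text, reason="no blocked terms found")


def guarded_generate(generate_fn, prompt):
    """Run any text generator, then pass its draft through the guardrail."""
    draft = generate_fn(prompt)
    return check_output(draft)


def fake_model(prompt):
    """Stand-in for a real generative model (assumption for this sketch)."""
    return "Here is a reply containing slur_example."


result = guarded_generate(fake_model, "Write a short greeting")
print(result.allowed, "|", result.reason)
```

Recording a reason alongside each decision also supports the transparency goal: teams can review what was blocked and why, and adjust the filter when it suppresses legitimate expression.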

PRACTICAL TAKEAWAYS FOR LEADERS

As you lead your organisation into the AI era, keep these key insights in mind:

- Anticipate ambiguity: Embrace the uncertainty of generative AI, continuously refining your policies as the technology evolves.

- Invest in responsible AI training: Educate your teams on ethical AI use, setting clear expectations around fairness, accuracy, and accountability.

- Prioritise transparency: Clearly communicate when AI-generated content is being used, fostering trust with customers and employees alike.

- Foster strategic dialogues: Regularly engage your leadership team in conversations about responsible AI to proactively address emerging challenges and opportunities.

EMBRACING GENERATIVE AI RESPONSIBLY

Generative AI is revolutionary, and its potential is extraordinary—both for creative expression and for practical business innovation. But this potential must be thoughtfully managed.

Remember, responsible AI isn't just about technology; it's about fostering trust, ethical clarity, and inclusive leadership. It's about thoughtfully shaping the human relationship with technology, ensuring it serves and enriches us rather than controlling or harming us.

Ready to explore responsible AI and its strategic implications for your organisation in more depth? It’s a conversation worth having, and the time is now.

READY TO CONFIDENTLY GUIDE YOUR ORGANISATION INTO THE AI ERA?

Schedule your Executive Insights Briefing today, and discover how you can harness AI strategically—ethically, responsibly, and profitably.

Because great leadership is human-first, even in an AI-driven world.
